The AI Deception Tools Market is expected to see strong demand in the coming years as cyber threats against governments and enterprises continue to rise. As attacks by malicious groups grow more advanced, artificial intelligence and machine learning will be essential for public and private entities aiming to safeguard their critical infrastructure and sensitive information.

New opportunities are emerging for startups building novel camouflage technologies that deceive attackers with artificially convoluted network activity, even as security experts call for balanced oversight of a developing field with profound societal implications. AI deception tools are one promising security solution gaining adoption. These systems apply techniques including generative AI, behavioural analytics, and digital decoys to identify and misdirect a variety of cyber-attacks.

By implementing artificial personas, honeypots, and decoy networks, AI deception technologies can thwart threat actors and buy time for defenders. While still an emerging field, these tools show immense potential. As adoption continues broadening across sectors such as defence, finance, and enterprise IT, the ability of AI to revolutionize cyber deception and security could safeguard nations in the digital age.

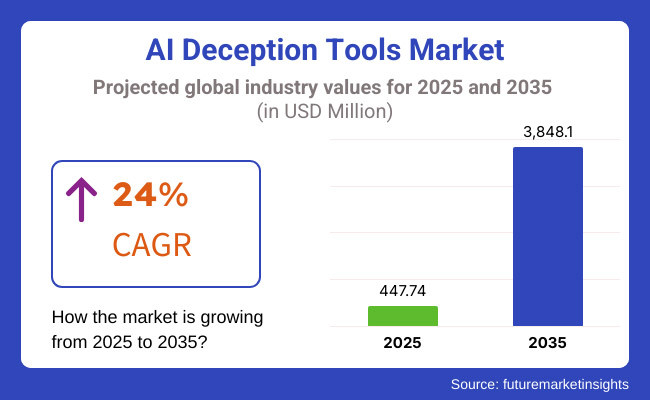

The market is projected to reach USD 447.74 Million in 2025 and USD 3848.1 Million by 2035, registering a CAGR of 24% over the forecast period. The shift toward autonomous cybersecurity solutions, AI-powered threat intelligence gathering, and real-time deception strategies is shaping the future of AI deception tools. Meanwhile, the growing focus on proactive defensive mechanisms, management of AI-generated misinformation, and adversarial AI counterstrategies further propels demand.
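The relationship between the 2025 value, the 2035 value, and the stated CAGR can be checked with the standard compound-growth formula. The snippet below is a minimal sketch of that arithmetic; the function names `cagr` and `project` are illustrative and not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.24 means 24%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report, in USD million.
start_2025 = 447.74
end_2035 = 3848.1
years = 10  # 2025 to 2035

implied_rate = cagr(start_2025, end_2035, years)
projected_2035 = project(start_2025, 0.24, years)

print(f"Implied CAGR: {implied_rate:.2%}")
print(f"2035 value at a 24% CAGR: USD {projected_2035:,.1f} million")
```

Both directions agree with the report's figures to within rounding: the two endpoints imply a rate of roughly 24%, and compounding the 2025 value at 24% for ten years lands close to the 2035 figure.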

North America is expected to hold the largest share in the AI Deception Tools Market, led by substantial investments in cybersecurity and initiatives from governments to increase AI safety. The United States and Canada lead owing to widespread adoption of AI deception solutions across the military, financial, and large corporate sectors.

The United States Department of Defense and the Cybersecurity & Infrastructure Security Agency are actively funding AI deception platforms to counter sophisticated threats from other nations and persistent attackers. Additionally, tech giants such as Microsoft, Google, IBM, and CrowdStrike are expanding deception-centered security offerings to tackle ransomware, deepfakes, and other AI-enabled cyber risks.

Critical industries including banking, healthcare, and infrastructure are incorporating AI-powered honeypots, decoy networks, and autonomous deception technology to safeguard against data breaches and insider threats. The region's leadership in cloud-based security solutions, AI-powered threat analysis, and autonomous cyber deception tactics further strengthens its market position.

Europe remains a substantial player in the AI deception tools market, with nations like Germany, the UK, and France at the forefront of cybersecurity approaches and AI compliance policies. The European Union's General Data Protection Regulation and revised Network and Information Security Directive mandate stronger cyber protections, prompting rising deployment of deception-centered solutions.

The region's growing concerns about AI-generated falsehoods, state-backed digital warfare, and financial fraud are accelerating demand for AI deception tools that identify and neutralize deepfake attacks, phishing scams, and AI-driven misinformation campaigns. Banking and financial sectors in Europe are investing heavily in AI-driven fraud prevention and cybersecurity deception to fight identity theft, synthetic fraud, and credential stuffing attacks.

In addition, the European Space Agency and national defense agencies have deployed AI deception strategies for securing satellite systems, cloud-based intelligence platforms, and cyber-physical systems. The development of ethical AI regulations, digital sovereignty projects, and AI-guided misinformation countermeasures is anticipated to further power market growth in Europe.

The Asia-Pacific region is expected to record the highest compound annual growth rate in the AI deception tools market, propelled by steadily growing cyber threats, increasing government focus on digital security, and proliferating AI-centered security deployments. Nations such as China, Japan, India, and South Korea remain at the leading edge of AI-enabled cybersecurity advancements and next-generation deception technologies.

China's government-led initiatives, such as the Cybersecurity Law and AI-driven cyber defense blueprints, are driving demand for autonomous deception tools to counter cyber espionage and nation-state cyber attacks. Additionally, Japan's cutting-edge cybersecurity research and India's expanding emphasis on automated deception against digital attacks from adversarial countries in the region add to demand. The People’s Liberation Army (PLA) and Chinese cybersecurity companies are investing in AI-powered misinformation identification and adversarial AI strategies for both offensive and defensive cyber operations. Furthermore, they are developing techniques to generate realistic but misleading digital content to confuse attackers and influence geopolitical adversaries.

Japan and South Korea, home to leading AI research hubs and cybersecurity firms, are focusing on enterprise-grade AI deception platforms, real-time threat detection, and deepfake identification technologies. They are also looking into generating simulated network traffic and fabricated confidential documents to disguise their actual operations. India’s growing IT security market and increasing government investment in AI-powered cybersecurity solutions are further fueling market expansion. The region’s rapid digital transformation, expansion of 5G networks, and adoption of AI-driven security analytics are expected to create significant opportunities for AI deception technology providers to develop ever more sophisticated tactics.

Challenges

Ethical Concerns and Regulatory Barriers

One of the core difficulties in the AI Deception Tools sector involves the ethical implications of AI-generated misinformation, synthetic data, and adversarial AI. Using AI-powered deception in cybersecurity, intelligence operations, and digital media oversight raises concerns about data privacy, misinformation control, and surveillance.

Additionally, divergent global cybersecurity standards combined with a lack of regulatory clarity on AI deception tools pose obstacles to cross-border adoption. The potential misuse of deceptive AI technologies by cybercriminals and nation-state actors further raises concerns about unforeseen security risks and legal complexities. Refining AI deception will require careful assessment of its benefits and shortcomings to ensure the technology progresses responsibly.

Opportunities

Expansion of AI-Powered Threat Intelligence and Zero Trust Security

Despite these complications, the AI Deception Tools Market offers considerable growth opportunities. The rise of zero-trust security frameworks, AI-enabled threat intelligence, and real-time cyber deception systems is driving progress in automated attack surface management and adversary engagement platforms. The growing incorporation of deception technologies in cloud security, IoT security, and AI-driven risk assessment is opening new market avenues.

Demand for proactive cybersecurity solutions that use AI deception to misdirect attackers, slow down breaches, and detect advanced persistent threats (APTs) is expected to rise. Additionally, collaborations between AI developers, cybersecurity firms, and regulatory agencies are driving advances in responsible AI deception, AI-enabled misinformation detection, and ethical cyber defense frameworks. The expansion of AI-based digital identity protection, autonomous security orchestration, and behavioural deception systems is also enhancing cyber resilience across industries.

Between 2020 and 2024, the emerging AI deception market became integral to cybersecurity, military defense, and counterintelligence. Government agencies and companies used deception technologies to strengthen cyber resilience, improve misinformation identification, and engineer new defenses against hostile AI. Deception-focused solutions such as honeypot lures, decoy systems, and AI-assisted misinformation detection became critical in the effort to reduce cyberthreats, deepfake attacks, and increasingly sophisticated phishing fraud.

Meanwhile, research organizations investigated generating intricate narratives and synthetic dialogues to outmaneuver adversaries, although this work came close to ethical boundaries. While deception proved powerful, leaders cautioned that truth remained the surest foundation for progress.

The proliferation of AI-generated falsehoods, manipulated "facts", and attacks from machine learning systems trained to deceive greatly accelerated investment in counter-deception AI models that can pinpoint and neutralize deceptive content spread through social media, embedded in financial transactions, or collected by intelligence agencies.

Between 2025 and 2035, advancements in AI deception tools will transform the cybersecurity landscape. Self-learning frameworks will develop the ability to deceive in novel ways, outpacing detection methods. Quantum-resistant models will emerge to mislead even the most powerful adversaries. Autonomous digital personas built on generative neural networks will converse and interact much as humans do, protecting networks by engaging and confusing would-be attackers.

Meanwhile, decentralized tracking systems will leverage blockchain's immutable ledger to unmask propaganda and falsified feeds, illuminating deception's spread for all to see. No misinformation will evade integrated AI oversight and authenticated exposure. As techniques evolve, so too must strategies to uncover untruth and safeguard the integrity of information, for in that mission humankind and machine find common purpose.

Market Shifts: A Comparative Analysis (2020 to 2024 vs. 2025 to 2035)

| Market Shift | 2020 to 2024 | 2025 to 2035 |
|---|---|---|
| Regulatory Landscape | Limited regulations on AI-generated misinformation and deception-based cybersecurity. | Global AI deception laws, ethical deception frameworks, and blockchain-secured AI authentication standards. |
| Technological Advancements | Deployment of honeypots, AI-driven decoys, and adversarial ML defenses. | Quantum-resistant deception models, autonomous digital decoys, and neurosymbolic AI-driven deception networks. |
| Industry Applications | Used in cybersecurity, financial fraud detection, and misinformation countermeasures. | Expanded applications in autonomous military deception, real-time AI misinformation tracking, and AI-driven counterintelligence. |
| Adoption of Smart Equipment | Limited AI integration in deception-based threat intelligence. | Autonomous AI deception frameworks, deep adversarial learning countermeasures, and real-time synthetic identity detection. |
| Sustainability & Cost Efficiency | High costs limited accessibility to AI deception platforms. | AI-optimized deception-as-a-service (DaaS), decentralized AI deception networks, and cost-effective cyber deception solutions. |
| Data Analytics & Predictive Modeling | AI-assisted misinformation detection and deepfake identification. | Quantum-AI-driven deception intelligence, real-time adversarial AI tracking, and blockchain-based misinformation authentication. |
| Production & Supply Chain Dynamics | Growth impacted by lack of standardized deception regulations and ethical AI concerns. | AI-optimized deception security frameworks, global deception intelligence collaborations, and decentralized misinformation tracking ecosystems. |
| Market Growth Drivers | Growth fueled by rising AI-generated cyber threats, deepfake fraud, and AI-driven misinformation. | Future expansion driven by quantum-AI deception security, autonomous cyber warfare strategies, and decentralized AI authentication ecosystems. |

The United States AI Deception Tools Market is experiencing unprecedented growth due to rising cyber threats, increasing defense spending on AI countermeasures, and adoption in private enterprises. Large investments from the Department of Homeland Security and Department of Defense into AI deception are focused on countering elaborate cyber attacks, sophisticated disinformation campaigns, and adversarial AI.

In the private sector, AI deception is being leveraged for high-risk areas like critical infrastructure, finance, and healthcare for threat intelligence gathering, fraud detection, and deepfake disruption. Predictive AI frameworks that deploy deception tactics are helping to mitigate attacks and improve digital investigative work. As generative AI and adversarial machine learning evolve, there is heightened demand for AI solutions that can monitor these new threats in real-time and preempt hostile AI with targeted deception.

| Country | CAGR (2025 to 2035) |
|---|---|
| USA | 24.5% |

The rapidly expanding AI Deception Tools Market in the United Kingdom is being driven by rising anxieties over cyber espionage and the government's encouragement of using artificial intelligence for digital security. As adoption of AI in the cybersecurity domain increases, the National Cyber Security Centre works diligently with private industry to deploy deception technologies for intelligence gathering and counterattacks.

Major financial institutions in the UK have invested heavily in AI-based fraud identification and deepfake detection to combat AI-generated scams and identity theft, both widespread problems. Meanwhile, the nation's emphasis on responsible and ethical AI development is also furthering advances in AI deception systems that mitigate disinformation and improve protection against adversarial AI. Concerns over misuse of AI for deception and disruption will likely motivate additional defensive experimentation and regulation in the years to come.

| Country | CAGR (2025 to 2035) |
|---|---|
| UK | 23.8% |

The rapidly expanding AI Deception Tools Market in the European Union is being shaped by several developments. Stringent cybersecurity regulations from the EU aim to increase security against evolving online threats. Simultaneously, heavy investment is pouring into defensive AI from governments seeking to gain an edge over adversaries. As a result, the capabilities of AI systems to intelligently outmaneuver human and machine foes are advancing at a swift pace.

Germany, France, and the Netherlands have emerged as early adopters, with major cybersecurity firms and public bodies allocating resources to build AI-driven honeypots, adversarial detection tools, and threat simulations. The EU Commission's recently published AI Act and Cybersecurity Strategy will likely accelerate this trajectory by encouraging the development of intelligent deception frameworks. Additionally, the deepening collaboration between agencies across Europe and within NATO on cyber protection brings the potential for even greater synergies and market growth on the continent.

| Country | CAGR (2025 to 2035) |
|---|---|
| European Union (EU) | 24.0% |

The rapid expansion of the AI Deception Tools Market in Japan stems from growing cybersecurity concerns as well as robust investments in protective AI infrastructure. The Ministry of Internal Affairs and Communications prioritizes the strategic adoption of AI deception tactics to counter AI-crafted cyber attacks, phishing schemes, and misinformation campaigns.

Major Japanese technology companies including Fujitsu, NEC, and SoftBank are integrating AI deception tools into enterprise security networks, fraud analysis, and AI-driven cyber defenses. Moreover, Japan's commitment to digital transformation and pioneering AI research is fostering the study of adversarial AI identification and deepfake countermeasures. Additionally, a number of smaller startups are exploring creative applications of AI deception to outwit automated attacks and safeguard networked systems, representing a potentially lucrative area of growth within the industry.

| Country | CAGR (2025 to 2035) |
|---|---|
| Japan | 24.3% |

The rapidly growing South Korean AI deception market has been spurred by national security priorities, cyber warfare threats, and demand from finance and defense. The Ministry of Science and ICT actively sponsors projects applying deceptive AI to counter digital espionage, online fraud, and misleading communications.

Korea's technology giants, such as Samsung and LG, have embedded AI deception capabilities into cybersecurity platforms. This enhances fraud protection, identity safeguarding, and threat intelligence gathering. Additionally, the heavy focus on AI-enabled smart cities has driven deployment of AI deception for urban cybersecurity and critical infrastructure resilience.

| Country | CAGR (2025 to 2035) |
|---|---|
| South Korea | 24.6% |

The AI deception field continues to transform rapidly as malicious actors become more sophisticated online. Both generative and adversarial AI have critical functions in protecting systems and users from coordinated disinformation. As deepfakes grow in complexity, defenders have developed countermeasures that employ the very technologies used by deceivers.

Detection grows harder as decoys become more lifelike and their behaviour appears authentic, yet defenders can disrupt planned attacks with decoy signals that attackers take to be genuine. The expanding market serves these defensive needs, stopping threats at their source with deception that attackers recognize only too late.

Adversarial AI is becoming increasingly important as a capability for cybersecurity, anti-fraud, and AI model stress testing. Adversarial examples, which are small, calculated variations of an input designed to deceive an AI system, are used to measure machine learning vulnerabilities, extend defenses, and guard against potential AI-driven digital attacks.
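To make the idea of an adversarial example concrete, the sketch below applies one well-known technique, the fast gradient sign method (FGSM), to a toy logistic classifier. The report does not name a specific method, so FGSM is used here purely as an illustration; the weights, input, and `epsilon` are hypothetical values chosen so the effect is easy to see.

```python
import math


def predict(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, label, epsilon):
    """Shift every feature by epsilon in the direction that increases the loss."""
    p = predict(weights, bias, x)
    # For cross-entropy loss on a logistic model, d(loss)/d(x_i) = (p - label) * w_i.
    gradient = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]


# Hypothetical toy model and input, for illustration only.
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.2, -0.1, 0.1]
label = 1  # the true class of x

x_adv = fgsm(weights, bias, x, label, epsilon=0.2)

print(f"Original score:    {predict(weights, bias, x):.3f}")
print(f"Adversarial score: {predict(weights, bias, x_adv):.3f}")
```

No feature moves by more than `epsilon`, yet the model's score crosses the 0.5 decision threshold, so the predicted class flips. Stress tests of this kind are one way to measure how exposed a model is before an attacker does.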

The increasing use of AI-driven deception techniques by financial institutions, defense organizations, and technology enterprises is fueling demand for adversarial AI. Companies use this evolving technology to simulate potential digital threats, thoroughly evaluate AI resilience under stress, and deploy deception-based security frameworks that confuse and mislead would-be intruders.

However, the risk of adversarial AI being misused for harmful purposes remains a concern. To counteract this, work on AI model hardening, explainable AI (XAI) for accountability, and robust adversarial training is advancing to strengthen AI defenses against foreseeable security weaknesses.

Generative AI plays a dual role in the deception landscape, serving both as a driver of misinformation and as a source of solutions to counteract misleading content. Technological advances such as deepfake generation, synthetic media creation, and AI-powered text manipulation have introduced new challenges to digital trust, necessitating the development of enhanced AI-based deception detection.

Organizations, governments, and social networking platforms are increasingly employing AI-based verification tools to identify fake news, doctored imagery, and manipulated videos in real time. Systems that apply blockchain authentication and digital watermarking, assisted by generative neural models, are being used to counter deception and the spread of falsehoods online.

However, generative AI continues to evolve, making sophisticated machine-made forgeries harder to identify. Progress in areas such as AI forensic investigation, deepfake detection algorithms, and principles of responsible generative model development will be pivotal in mitigating risks from deceptive AI content, though demand remains for techniques that address even the most subtle deceptions.

The adoption of AI deception tools is largely influenced by their applications in cybersecurity and defense, where they enhance threat intelligence, cyber resilience, and counterintelligence capabilities.

The cybersecurity field has seen explosive growth in the strategic application of deceptive artificial intelligence tools meant to outmaneuver and expose malicious actors online. At the forefront have been financial institutions, cloud providers, and technology enterprises rushing to integrate adversarial techniques, virtual decoys known as honeypots, and deepfake detectors as a means of staying one step ahead of increasingly sophisticated digital threats. These organizations have turned to AI deception in order to misdirect hackers, identify fraudulent patterns in real-time, and uncover hidden vulnerabilities before bad actors can exploit them.
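One simple form of decoy is a planted account that no legitimate user should ever touch, so any attempt to use it is a strong signal of intrusion. The sketch below illustrates that idea only; the class `DecoyRegistry`, the account names, and the IP addresses (taken from documentation-reserved ranges) are hypothetical and do not describe any vendor's product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecoyRegistry:
    """Tracks planted decoy accounts and records any attempt to use them."""

    decoy_usernames: frozenset
    alerts: list = field(default_factory=list)

    def check_login(self, username: str, source_ip: str) -> bool:
        """Return True and record an alert if the login used a decoy account."""
        if username not in self.decoy_usernames:
            return False
        self.alerts.append(
            {
                "username": username,
                "source_ip": source_ip,
                "seen_at": datetime.now(timezone.utc).isoformat(),
            }
        )
        return True


# Hypothetical decoy accounts seeded where an intruder might find them.
registry = DecoyRegistry(frozenset({"svc-backup-admin", "finance-archive"}))

attempts = [
    ("alice", "198.51.100.7"),              # ordinary user, not a decoy
    ("svc-backup-admin", "203.0.113.42"),   # decoy account, should be flagged
]
flagged = [registry.check_login(user, ip) for user, ip in attempts]

print(flagged)
print(registry.alerts)
```

Because legitimate users have no reason to use a decoy account, an alert from this check tends to carry fewer false positives than anomaly-based detection, which is part of the appeal of deception alongside detection.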

As automated phishing, machine learning-powered malware, and social engineering have become more prevalent on a daily basis, so too has demand increased for approaches rooted in deception rather than detection alone. Nevertheless, regulating the use of such strategies will surely be an ongoing challenge, as questions emerge around ensuring technologies remain ethically grounded and do not infringe on civil liberties or privacy expectations over time.

Continuous innovation in fields like self-evolving frameworks able to mirror adversary tactics, zero-trust models that treat nothing as inherently secure, and AI-driven ongoing threat analysis promises to sustain momentum for solutions founded on misleading threats instead of merely monitoring them.

The defense and intelligence sector is increasingly adopting adversarial artificial intelligence and deception-driven simulation frameworks for digital warfare situations, strategic counterintelligence missions, and espionage detection applications. Governments and armed forces organizations are leveraging machine learning algorithms and synthetic media to detect foreign online disinformation campaigns, proactively mislead opposing AI systems, and autonomously neutralize emerging cyber risks.

These AI-powered strategic deception techniques are now being integrated into new electronic warfare defense infrastructures, military-grade cybersecurity systems, and autonomous drone surveillance networks to ensure operational security, resistance to misinformation, and resilience against leaks of classified information. In addition, leading defense agencies have started deploying algorithmically generated synthetic digital identities and AI-augmented digital camouflage technologies to protect crucial data archives and intentionally misguide determined adversaries.

Although these tactics offer clear strategic advantages, ethical concerns have been raised about the automated production and spread of misinformation by defense AI systems, and about ensuring responsible oversight of deception tactics. However, recent progress in the development of international AI governance frameworks, strategic deception ethics policies, and collaborative cybersecurity alliances is expected to help balance security-driven innovation with accountability and responsible AI leadership.

The AI Deception Tools Market has grown quickly in recent years as businesses seek new protection against a barrage of online threats. With cyber attacks growing more numerous and more sophisticated, forward-thinking organizations have begun producing defences such as AI-driven honeypots, real-time intrusion alerts, and countermeasure software. Top security companies, research institutes, and state agencies are all working to craft increasingly sophisticated deception strategies. Their work involves generating artificial networks, detecting the spread of misinformation, and using counterintelligence methods to mislead malicious actors.

Buyers, for their part, are scanning the market for innovations that may help shield them from the next attack.

Meanwhile, researchers are exploring new applications of generative models and adversarial machine learning to better identify and repel online attacks through simulated vulnerabilities and fabricated digital environments. The growing market underscores the need for persistent protection against high-stakes intrusions through strategic deception.

Market Share Analysis by Company

| Company Name | Estimated Market Share (%) |
|---|---|
| CrowdStrike Holdings, Inc. | 14-18% |
| SentinelOne, Inc. | 12-16% |
| Rapid7, Inc. | 10-14% |
| Acalvio Technologies | 8-12% |
| Smokescreen Technologies | 6-10% |
| Other Companies (combined) | 35-45% |

| Company Name | Key Offerings/Activities |
|---|---|
| CrowdStrike Holdings, Inc. | Develops AI-driven cyber deception tools, honeypots, and adversarial machine learning defenses for enterprise security. |
| SentinelOne, Inc. | Specializes in autonomous AI deception techniques, endpoint security deception, and automated threat response. |
| Rapid7, Inc. | Provides deception-based cybersecurity solutions, including decoy networks and attack surface management tools. |
| Acalvio Technologies | Manufactures AI-powered cyber deception platforms, with real-time threat detection and attack response automation. |
| Smokescreen Technologies | Focuses on deception-as-a-service, using AI-driven traps to mislead and neutralize cyber threats. |

Key Company Insights

CrowdStrike Holdings, Inc. (14-18%)

CrowdStrike is a leader in AI deception-based cybersecurity, offering honeypots, decoy networks, and adversarial AI defenses to detect and mislead cyber attackers.

SentinelOne, Inc. (12-16%)

SentinelOne specializes in autonomous deception techniques, integrating AI-driven endpoint security and self-learning threat detection.

Rapid7, Inc. (10-14%)

Rapid7 provides deception-based security solutions, focusing on decoy environments, attack surface reduction, and intrusion analysis.

Acalvio Technologies (8-12%)

Acalvio develops AI-powered deception platforms that use automated threat intelligence and real-time attack response mechanisms.

Smokescreen Technologies (6-10%)

Smokescreen offers deception-as-a-service, using AI-generated lures and false attack vectors to trap malicious actors.

Other Key Players (35-45% Combined)

Several cybersecurity and AI technology firms contribute to AI deception tools, cyber threat intelligence, and advanced deception-driven security frameworks. These include:

The overall market size for the AI Deception Tools Market was USD 447.74 Million in 2025.

The AI Deception Tools Market is expected to reach USD 3848.1 Million in 2035.

Increasing cyber threats, rising adoption of AI-driven security solutions, and growing demand for advanced threat intelligence will drive market growth.

The USA, China, Germany, the UK, and Israel are key contributors.

Network security deception tools are expected to dominate due to their effectiveness in detecting and mitigating cyber threats.
